The black art of platform conversions: The release candidate

Sponsored article: Abstraction Games details the penultimate stage of the adaptation process -- the release candidate

In our previous article we covered the "tailored build" stage where we explained the challenges of turning a straightforward port into an adaptation that is properly tailored to the target platform as players have come to expect. In this article we will talk about the penultimate stage of the adaptation process: the release candidate.

The goal of the release candidate is to deliver a build that is ready for first-party submission. For this, the build needs to be fine-tuned for the new platform, compliant with all technical requirements of the platform, and free of any major bugs. Let's talk about fine-tuning first.

Fine-tuning

Some of the most common areas for fine-tuning are the loading times and memory footprint of the game. These are areas where a PC can have a noticeable advantage over consoles. Games, especially smaller PC-only titles, often do not need to pay a lot of attention to the amount of memory that they use, or the time it takes to load a map. Most gaming PCs these days have fast hard drives or SSDs, and a lot more memory than is available on the current generation of console platforms.

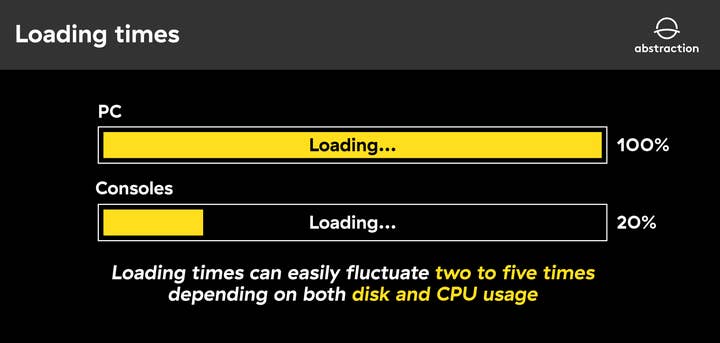

Loading times can easily vary by a factor of two to five depending on both disk and CPU usage. While 30 seconds is probably fine for a PC game, loading times of one to two minutes will not provide a great player experience.

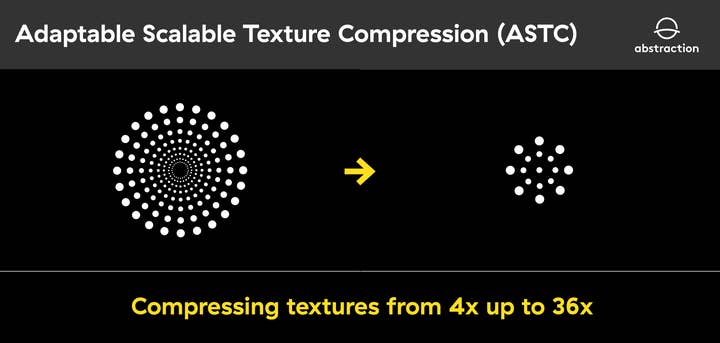

Textures, sound and music assets are often big culprits when it comes to memory usage and can indirectly affect loading times. A 'quick and easy' way to reduce both loading time and memory footprint is to convert those assets to a compressed format, ideally ones that are natively supported on the platform.

An example of this is Adaptive Scalable Texture Compression (ASTC), which the Nintendo Switch natively supports and which can compress textures by a factor of four up to an impressive 36. Most of these are lossy compression schemes though, which means there is always some loss of quality.
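
The range of four to 36 times follows from how ASTC is laid out: every block is stored in 128 bits, and the block footprint ranges from 4x4 to 12x12 texels. The short calculation below reproduces those ratios. It assumes an uncompressed 32-bit RGBA source, and the function names are illustrative only.

```python
# ASTC stores every block in 128 bits, regardless of how many texels it covers.
ASTC_BLOCK_BITS = 128
# Assumption: the uncompressed source is 8-bit RGBA, i.e. 32 bits per texel.
UNCOMPRESSED_BITS_PER_TEXEL = 32


def astc_bits_per_texel(block_w: int, block_h: int) -> float:
    """Bits spent per texel for a given ASTC block footprint."""
    return ASTC_BLOCK_BITS / (block_w * block_h)


def astc_compression_ratio(block_w: int, block_h: int) -> float:
    """How many times smaller the texture is compared to the RGBA8 source."""
    return UNCOMPRESSED_BITS_PER_TEXEL / astc_bits_per_texel(block_w, block_h)


if __name__ == "__main__":
    for w, h in [(4, 4), (6, 6), (8, 8), (12, 12)]:
        bpp = astc_bits_per_texel(w, h)
        ratio = astc_compression_ratio(w, h)
        print(f"{w}x{h}: {bpp:.2f} bits per texel, {ratio:.1f}x smaller")
```

The smallest footprint (4x4) costs 8 bits per texel, a factor of four. The largest (12x12) costs roughly 0.89 bits per texel, a factor of 36, and gives the most visible quality loss.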

Another effective way to improve both loading times and memory usage is asset streaming. For Totally Reliable Delivery Service, which uses the Unity engine, enabling texture mipmap streaming eventually saved us over 30 seconds of loading time. With this enabled, the higher detail levels of a texture are only loaded when the texture covers a large enough area of the screen.
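
To get a feeling for why leaving out the highest detail levels helps so much, the sketch below adds up the size of a mip chain with and without its largest levels. It is plain arithmetic under the assumption of an uncompressed 4-bytes-per-texel texture. It does not model how Unity decides which levels to stream, and `mip_chain_bytes` is a name made up for this example.

```python
def mip_chain_bytes(width: int, height: int,
                    bytes_per_texel: int = 4,
                    skip_top_mips: int = 0) -> int:
    """Total size of a mip chain, optionally leaving out the largest levels.

    Assumption: uncompressed texels of a fixed size. Compressed formats
    change the absolute numbers but not the proportions between levels.
    """
    total = 0
    level = 0
    while True:
        if level >= skip_top_mips:
            total += width * height * bytes_per_texel
        if width == 1 and height == 1:
            break
        # Each mip level halves both dimensions, down to 1x1.
        width = max(1, width // 2)
        height = max(1, height // 2)
        level += 1
    return total


if __name__ == "__main__":
    full = mip_chain_bytes(2048, 2048)
    without_top = mip_chain_bytes(2048, 2048, skip_top_mips=1)
    print(f"full chain:      {full / 2**20:.1f} MiB")
    print(f"without top mip: {without_top / 2**20:.1f} MiB")
```

Because each level holds four times as many texels as the one below it, the top level alone accounts for about three quarters of the whole chain. Not loading it until it is needed saves both memory and the time spent reading it from disk.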

But it is not always this easy. For other titles, we had to take streaming a lot further to bring down the loading times. For ARK and PixARK, we had to extend Unreal Engine 4 with custom functionality of our own design to support both static and skeletal mesh LOD streaming as well as skeletal mesh animation streaming. We also rewrote the texture mip streaming to have a fixed memory budget and implemented Opus audio streaming to make use of the hardware audio decoding features of the Nintendo Switch.

While they did reduce memory usage, these changes were still not enough. A common issue in Unreal Engine 4 titles is 'hard' references between dependent assets, which create a long dependency chain of assets that all need to be loaded. Fixing this dependency chain would mean changing original content, and for an adaptation changing a lot of original content is not always an option, especially if you consider future DLC or content patches. In general, content tends to be extremely hard to merge correctly. To cope with so many active assets due to all these dependencies, we added support for going below the minimum LOD levels for meshes and textures, and only allowed any of these LOD levels to load if an asset was actively in use by the game.

Much of the work needed for streaming can be handled asynchronously, but it does come with a runtime cost that can certainly negatively impact the frame rate. Streaming often receives a certain time budget per frame to keep this impact both minimal and predictable.

Even for the next console generation, this can still be something to pay attention to. While they provide extremely fast solid-state disks, it could still mean a lot of work needs to be put into adding or improving streaming solutions for higher quality assets.

Below are a few examples of the extra effort that went into fine-tuning ARK and PixARK for consoles.

- PixARK/ARK - Saving and loading optimizations

By Eric Troost & Ross Martin

When we first started working on PixARK, the game was using SQLite to store its voxel terrain database. This database was performing a lot of I/O operations and was quite inefficient at what it was doing. The I/O operations especially were an issue for us, as consoles have limits on the allowed number of reads and writes per minute.

To address this, we decided to remove the SQL database entirely and instead use a custom binary format to store the voxel data. Doing so gave us a lot more control over when we wanted to perform I/O operations since we now had the ability to store changes into our custom database without immediately saving it to disk. This also helped with performance, since the operations we were doing in our custom database were very cheap.

A second issue was that the size of the voxel database would grow very large as more chunks of the world were explored. To fix this, we decided to store only the voxel changes in the database instead of the entire terrain. We could then use the seed of the world to generate the original map and then apply all the changes from the database.
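
The idea of keeping only the changes can be sketched in a few lines. The example below is a minimal illustration, not the format used in PixARK: the terrain generator, the voxel types, the class name and the binary layout are all assumptions made up for this example. Changes are kept in memory and only turned into bytes when the caller asks for them, which mirrors the control over I/O timing described above.

```python
import hashlib
import struct

AIR, DIRT, STONE = 0, 1, 2          # hypothetical voxel types
HEADER = "<qI"                      # seed, number of changes
RECORD = "<iiiB"                    # x, y, z, voxel type


def generate_voxel(seed: int, x: int, y: int, z: int) -> int:
    """Deterministic stand-in for a real terrain generator (hypothetical)."""
    digest = hashlib.sha256(struct.pack("<qqqq", seed, x, y, z)).digest()
    return DIRT if digest[0] % 2 == 0 else STONE


class VoxelWorld:
    def __init__(self, seed: int):
        self.seed = seed
        self.changes = {}           # (x, y, z) -> voxel type, edited voxels only
        self.dirty = False          # True when there are unsaved changes

    def get(self, x: int, y: int, z: int) -> int:
        # An edited voxel wins; everything else is regenerated from the seed.
        key = (x, y, z)
        if key in self.changes:
            return self.changes[key]
        return generate_voxel(self.seed, x, y, z)

    def set(self, x: int, y: int, z: int, voxel: int) -> None:
        if voxel == generate_voxel(self.seed, x, y, z):
            self.changes.pop((x, y, z), None)   # back to the generated value
        else:
            self.changes[(x, y, z)] = voxel
        self.dirty = True

    def serialize(self) -> bytes:
        """Pack the seed and the changes; the caller decides when to write."""
        parts = [struct.pack(HEADER, self.seed, len(self.changes))]
        for (x, y, z), voxel in sorted(self.changes.items()):
            parts.append(struct.pack(RECORD, x, y, z, voxel))
        self.dirty = False
        return b"".join(parts)

    @classmethod
    def deserialize(cls, blob: bytes) -> "VoxelWorld":
        seed, count = struct.unpack_from(HEADER, blob, 0)
        world = cls(seed)
        offset = struct.calcsize(HEADER)
        for _ in range(count):
            x, y, z, voxel = struct.unpack_from(RECORD, blob, offset)
            world.changes[(x, y, z)] = voxel
            offset += struct.calcsize(RECORD)
        return world
```

With this layout an untouched world costs only the header, and every edited voxel adds a fixed 13 bytes, no matter how much of the map has been explored.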

We later identified some major performance hitches whenever we saved world objects. After some investigation, we found that if a user had explored a lot of the world, it would cause thousands of persistent objects to be generated, which would then be picked up by the saving system. The saving system at the time would just iterate through all the necessary objects and serialize them sequentially on the main thread.

We ended up rewriting the saving system so that it would save the objects we considered critical in the first frame and the rest of the objects over multiple frames. The objects we deemed critical were those containing inventories or similar functionality that the player could actively interact with. The save system would use all the time it could in a frame without causing the game to drop below 30 fps, while also using a minimum amount of time to guarantee that objects were saved at all. With the implementation of the new saving system, we removed all the hitches related to saving, regardless of how much of the world was explored.
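
A time-sliced save of this kind could look roughly like the sketch below. It is an assumption-laden illustration rather than the PixARK implementation: the class name, the minimum budget value and the way the remaining frame time is passed in are all invented for this example. The clock is injected so the behaviour can be tested deterministically.

```python
from collections import deque

FRAME_TARGET_S = 1.0 / 30.0    # from the text: never drop below 30 fps
MIN_SAVE_BUDGET_S = 0.001      # assumption: guaranteed minimum time per frame


class IncrementalSaver:
    """Saves critical objects at once and spreads the rest over frames."""

    def __init__(self, objects, is_critical, serialize, clock):
        self.critical = [o for o in objects if is_critical(o)]
        self.pending = deque(o for o in objects if not is_critical(o))
        self.serialize = serialize
        self.clock = clock          # e.g. time.perf_counter in a real game loop
        self.first_frame = True

    def done(self) -> bool:
        return not self.first_frame and not self.pending

    def tick(self, game_time_this_frame_s: float) -> int:
        """Call once per frame after the game work; returns objects saved."""
        saved = 0
        if self.first_frame:
            # Critical objects are never deferred.
            for obj in self.critical:
                self.serialize(obj)
                saved += 1
            self.first_frame = False

        # Use whatever is left of the frame, but never less than the minimum,
        # so saving always makes progress even on slow frames.
        headroom = FRAME_TARGET_S - game_time_this_frame_s
        budget = max(MIN_SAVE_BUDGET_S, headroom)

        start = self.clock()
        while self.pending and self.clock() - start < budget:
            self.serialize(self.pending.popleft())
            saved += 1
        return saved
```

Because the budget check happens before each object, at least one deferred object is written per frame even when the game itself already used the whole frame. That is what keeps the save from stalling indefinitely.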

The previous example was about a game made in Unreal Engine 4. When a game is built in Unity, on the other hand, the post-process pipeline is often a good place to fine-tune the assets for optimal performance, as described below.

- The Sexy Brutale

By Wouter van Dongen

Porting of The Sexy Brutale happened while the title was still in development. We were often merging back and forth with the original developer's codebase. Due to the binary nature of most assets in Unity, we tried to avoid merging these at all costs.

Merging frequently helped a lot during the general adaptation and certification work. Many of the game's issues could be resolved by almost painlessly merging back and forth with the developer's codebase.

The big downside to porting a game still in development is that assets are still being worked on during the process and may increase loading times and lower frame rates. Therefore, optimizing the game is often postponed to the very end as it's only then that you know what you are really dealing with.

We were faced with the challenge of optimizing the game for consoles, which ran at ~15 fps at the time, to run at 30+ fps and have seamless transitions between the game rooms.

Profiling showed that we were draw-call bound in most of the scenes. To address this, we added a step to the Unity build pipeline to merge meshes with identical materials that reside in the same room. Soon we started adding more and more steps, as we identified more opportunities to optimize how the assets were set up and used. It turned out that a lot of materials were duplicated. Deduplication caused yet again more meshes to be merged. We found that the same was true for textures and shaders.
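
The grouping logic behind such a build step can be sketched independently of Unity. In the example below, `Material`, `Mesh` and `plan_merges` are hypothetical stand-ins and not Unity types. Materials are compared by value, so two separately authored but identical materials end up in the same group, which is the effect deduplication had on the number of merges.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Material:
    # frozen=True gives value equality and hashing, so duplicated materials
    # with the same properties are treated as one.
    shader: str
    texture: str
    tint: tuple


@dataclass
class Mesh:
    name: str
    room: str
    material: Material


def plan_merges(meshes):
    """Group meshes sharing a room and an identical material.

    Each group can be merged into a single mesh, i.e. a single draw call.
    """
    groups = defaultdict(list)
    for mesh in meshes:
        groups[(mesh.room, mesh.material)].append(mesh)
    return dict(groups)


if __name__ == "__main__":
    wood_a = Material("lit", "wood.png", (1.0, 1.0, 1.0))
    wood_b = Material("lit", "wood.png", (1.0, 1.0, 1.0))   # a duplicate
    brass = Material("lit", "brass.png", (1.0, 1.0, 1.0))
    meshes = [
        Mesh("chair_a", "bar", wood_a),
        Mesh("chair_b", "bar", wood_b),
        Mesh("lamp", "bar", brass),
        Mesh("chair_c", "hall", wood_a),
    ]
    groups = plan_merges(meshes)
    print(f"draw calls before: {len(meshes)}, after: {len(groups)}")
```

Meshes in different rooms stay separate even when they share a material, so a merged mesh never spans more than one room.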

Now, while this may sound easy and straightforward, it is often not the case. Making builds and cooking content can take hours and is often done after office hours. So, you only really know if everything worked out the next morning. A day could easily be lost due to a stupid mistake that caused the build to fail.

These content optimizations also improved loading times, but not enough for the room transitions to be seamless. The scenes were set up in such a way that they each contained the connected rooms. So, a scene with three doors would contain four rooms: the room itself and the three rooms the doors lead to. When rushing from room to room, you would be faced with hangs and loading indicators.

At first, we tried to improve the loading speed by improving the loading code itself. This got us close, but we still didn't have the performance we were after. In the end, close to submission, we updated our custom build step to split up the rooms into individual scenes by identifying which assets belong to what room. This reduced the amount of data that had to be streamed in and out by a great deal, because we were essentially loading in smaller increments.

Technical compliance

Next to fine-tuning, another large part of the work that needs to be done in the release candidate stage is fulfilling first-party certification requirements. These requirements are specified by the platform holders and are sometimes completely different from what you would need to consider for the average PC game.

There are many different kinds of requirements, and they cover different aspects of a game. They range from what exactly needs to be submitted to the platform holder for the game to show up in the online stores, all the way to details about interacting with platform features or making sure that the game can be updated at a later point. Certain platforms also have their own requirements that are specific to the hardware. One easy example is how a game needs to run on the Nintendo Switch in both handheld and docked modes.

Below are a couple of stories about these technical requirements and how it can be quite tricky to implement them into a game that didn't consider them from the outset.

- Suspend and resume

By Adrian Zdanowicz

Suspend and resume is a feature on Xbox One consoles, allowing the user to pause and minimize the game at any given moment. The user is given an option to keep the game running in the background while they use either the OS dashboard or any non-game application (such as the Microsoft Store or a web browser), then resume where they left off.

This feature, however, is not controlled solely by the OS. The game needs to be aware of both suspend and resume, and it needs to meet a specific set of conditions for those actions to complete. If your game fails to comply, the OS shuts it down -- so in practice, it is unacceptable for the game not to support this feature properly.

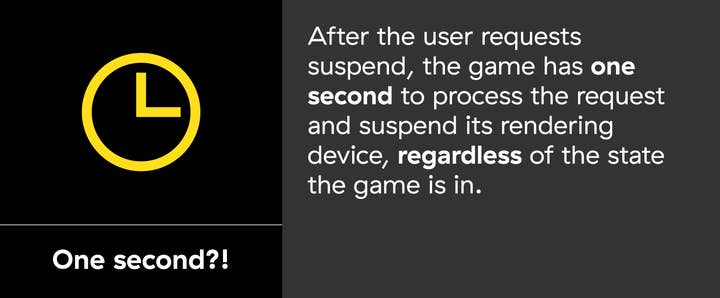

The biggest challenge in retrofitting this functionality to the existing game code is a stringent deadline imposed on the game by the OS. After the user requests suspend, the game has one second to process the request and suspend its rendering device, regardless of the state the game is in (loading screens, gameplay, cutscene, menus...). And that works the same when it comes to processing the resume request.

Depending on how the game logic is constructed, it can be extremely hard to guarantee a swift enough response while keeping thread safety in mind. This was a big challenge in one of the contemporary AAA titles we recently ported to Xbox One. The initial implementation was prone to obscure race conditions and kept failing on numerous edge cases, most notably when suspending during matchmaking. We had to put a significant amount of time into testing this single feature to catch any possible cases where suspend failed.

Even in games that are not heavily threaded, implementing suspend properly can pose a challenge. Both Hotline Miami games are relatively simple in comparison to other modern titles, but even there, implementing suspend functionality that never failed took several days. In this case, suspend would often time out and fail during loading. As it turned out, big parts of the loading routines were effectively big loops that never reached the OS message pump. Since the suspend and resume requests are both OS messages, we had to add functionality so that any of those "long-running operations" still processed the messages in the background. This would allow the game to gracefully suspend the rendering device and proceed with the suspend process.
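
The underlying problem and one possible remedy can be shown with a small, platform-neutral sketch. Nothing below is Xbox One API: the queue stands in for the OS message queue, and `Game`, `pump_messages` and `load_level` are hypothetical names. The sketch polls for messages from inside the loop; the text does not say whether the shipped solution polled like this or used a separate thread.

```python
import queue
import time

SUSPEND, RESUME = "suspend", "resume"   # stand-ins for the OS messages


class Game:
    def __init__(self):
        self.os_messages = queue.Queue()   # stand-in for the OS message queue
        self.rendering_suspended = False
        self.log = []

    def pump_messages(self) -> None:
        """Drain pending OS messages; cheap enough to call from any loop."""
        while True:
            try:
                message = self.os_messages.get_nowait()
            except queue.Empty:
                return
            if message == SUSPEND:
                self.rendering_suspended = True
                self.log.append("suspended rendering device")
            elif message == RESUME:
                self.rendering_suspended = False
                self.log.append("resumed rendering device")

    def load_level(self, assets, pump_interval_s=0.05, clock=time.monotonic):
        """A long-running loop that still reaches the message pump."""
        last_pump = clock()
        loaded = []
        for asset in assets:
            loaded.append(asset)           # placeholder for real loading work
            # Without this check the loop would only see a suspend request
            # after the whole level had finished loading.
            if clock() - last_pump >= pump_interval_s:
                self.pump_messages()
                last_pump = clock()
        self.pump_messages()
        return loaded
```

The pump interval has to stay well below the deadline imposed by the OS, and every individual step inside the loop has to be shorter than that deadline too. A single slow asset would otherwise still cause a timeout.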

On top of everything, suspend is also relatively hard to debug. When the application is terminated by the OS due to missing the deadline, no additional information is provided to the developer, and it is impossible to set up a debugger to indicate that the suspension "would have timed out". It is also impossible to debug a process that has been suspended from outside of the debugger. All those issues contribute to the difficulty of debugging, because the developer can't rely much on the tools and instead has to thoroughly analyze the code, add additional logging, or just get lucky.

- HLM Hardware cursor

By Adrian Zdanowicz and Balázs Török

Google Stadia is one of those platforms where one can encounter some unexpected certification requirements. These are mostly related to it being a streaming platform, and since industry experience with such a platform is rare, there's usually something new around every corner.

As an example, in our Hotline Miami adaptation, we encountered one issue in a later stage of the process, but we will mention it here because it is normally handled in the RC phase.

Reducing the perceived latency is crucial for streaming platforms. To facilitate this, Stadia requires games to use a hardware cursor for the mouse if it is at all possible. For those who are not well versed in this term, a hardware cursor means that the game doesn't render the mouse cursor but tells the operating system to render it, usually providing an image or small animation (ever noticed how the cursor in Windows does not change its colour with different colour correction settings?).

The benefit of this is that the cursor can always closely follow the movement of the mouse, even if there are frame drops in the game. Different operating systems then provide different features to modify the behavior of this cursor as the game requires, for example, locking the cursor inside the game window on Windows.

This explanation already alludes to why Stadia requires the usage of the hardware cursor in the games. The latency of sending the mouse input to the server and then the game rendering the cursor and sending it back to the player would introduce considerable lag. That might be acceptable for players who live really close to the server and have a good connection but for others who don't, it might completely break the experience.

Hotline Miami and its sequel never supported a hardware cursor, which meant that our adaptation had to take this into consideration. Unfortunately, we realized this quite close to the deadline and therefore only had a few days to quickly refactor the game systems to make this work.

One issue we ran into was that the original game code was based on processing relative mouse coordinates. This mode is not supported by Stadia in combination with a hardware cursor. It would also cause issues if the Stadia window lost focus and later regained it with a different mouse position. So, we had to rewrite a considerable part of the cursor handling code, not only to switch off the rendering but also to handle absolute positions.
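
The difference between the two modes, and the focus problem in particular, can be illustrated with a small sketch. It is not the Hotline Miami code and does not use any Stadia API. `AbsoluteCursor` is a hypothetical helper that takes absolute positions as the source of truth and derives deltas from them for any code that still expects relative movement.

```python
class AbsoluteCursor:
    """Tracks absolute positions and derives per-event deltas from them."""

    def __init__(self):
        self.position = None     # last known absolute position, if any
        self.has_focus = True

    def on_focus_lost(self) -> None:
        self.has_focus = False

    def on_focus_gained(self) -> None:
        self.has_focus = True
        # Forget the stale position: the mouse may be somewhere else entirely
        # by now, and that distance must not be reported as a movement.
        self.position = None

    def on_mouse_moved(self, x: int, y: int):
        """Returns (dx, dy); (0, 0) for the first event after gaining focus."""
        if not self.has_focus:
            return (0, 0)
        if self.position is None:
            delta = (0, 0)
        else:
            delta = (x - self.position[0], y - self.position[1])
        self.position = (x, y)
        return delta
```

A handler that only accumulated relative deltas would either miss the movement made while the window was unfocused or report it as one large jump. Resynchronizing on the absolute position avoids both.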

When all this was done, we realized that the issue was even more complicated. The cursor in Hotline Miami is not only rendered by the game but also animated, and there are some special states of the cursor showing possible interactions for the player. So, we needed to export these animations from the game data and use the platform functions to drive the animation frame by frame from the game. This way, we implemented the continuously expanding and contracting motion of the cursor and the special states. Unfortunately, supporting the way the cursor changes colors in the original game would have introduced a combinatorial explosion, and so we decided to make the cursor grayscale in the end.

Last minute bug-squashing

Finally, after all is said and done, the release candidate stage is also the last chance to fix any major issues before submitting the game. Below is one particularly tricky issue that we encountered recently, again in PixARK.

- PixARK Pink texture

By Balázs Török

Sometimes the release candidate stage can hide some really nasty surprises. This was the case with PixARK. But to understand this issue, first we have to mention that PixARK was made with a version of UE4 that did not support the Nintendo Switch platform. During the porting process, the decision was made not to update the engine because it was deemed too difficult. Instead, Switch support was surgically transplanted back from a later version. This was working quite well at this stage of the project, and the game was running with acceptable performance already, but there was one very strange issue happening.

Every few runs, the game would start with some texture mip levels being just pure magenta. Even though the game is quite colorful, this was very apparent, and it always seemed to happen right at the moment when the level loaded. It looked especially bad because moving closer to an object would completely change the color of said object as a different mip level would be sampled from the texture. Since the issue didn't happen every single time, it pointed to some kind of threading issue, possibly related to texture mip level streaming. With all these symptoms observed, the hunt for the cause began.

Since there aren't any good tools for debugging such multithreading-related issues, the first part of debugging was to very carefully read the code and see if we could notice anything bad. Unfortunately, the reason eluded multiple people, and we continued by trying to step through the code with a debugger. When this also failed to reveal anything, we took a couple of GPU captures and tried to see if there was anything special about the broken textures, but this also didn't point at anything specific. All the while, we had to run the game multiple times and hope that the issue would manifest on the next load.

This whole process already took a couple of days with multiple people working on it. Finally, we decided to resort to the good old-fashioned solution of logging everything that happens in the texture streaming. The reason this solution was left as a last resort was that the logging itself can make the issue almost impossible to reproduce as it actually changes the timing. Fortunately, we were able to implement this in a way that we were still able to reproduce the bug.

We still needed to figure out what the issue was. After several hours of poring over logs from the game, we finally noticed that in some edge case, a mipmap could be flagged for removal during the level loading process. Later on, when the level finished loading, the game would start streaming in the higher mipmaps but end up throwing them away because of this flag. After we confirmed and fixed the issue, we found that the same fix had been made in a newer version of UE4 than the one we used as a basis to reverse merge the Switch support.
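
The sequence of events can be modelled in a few lines. The sketch below is a deliberately simplified, single-threaded model of a stale flag and not UE4 code. The class, its fields and the fix shown (clearing the flag when a new stream-in request is made) are assumptions for illustration, since the text does not describe what the actual fix looked like.

```python
class StreamedTexture:
    """Toy model of a texture whose mips are streamed in and out."""

    def __init__(self, fix_applied: bool):
        self.fix_applied = fix_applied
        self.resident_mips = 1           # only the lowest detail level loaded
        self.target_mips = 1
        self.pending_removal = False     # set when a mip drop was requested

    def request_mip_removal(self) -> None:
        # In the bug scenario this happens while the level is still loading.
        self.pending_removal = True

    def request_stream_in(self, target_mips: int) -> None:
        if self.fix_applied:
            # Assumed fix: a newer request supersedes the stale removal flag.
            self.pending_removal = False
        self.target_mips = target_mips

    def finish_stream_in(self) -> None:
        if self.pending_removal:
            # The stale flag wins and the freshly loaded mips are discarded.
            self.pending_removal = False
            return
        self.resident_mips = self.target_mips


def run_scenario(fix_applied: bool) -> int:
    texture = StreamedTexture(fix_applied)
    texture.request_mip_removal()        # during level loading
    texture.request_stream_in(8)         # after the level finished loading
    texture.finish_stream_in()
    return texture.resident_mips
```

In the model, `run_scenario(False)` leaves the texture with only its lowest mip, while `run_scenario(True)` ends with all eight levels resident. In the real game the outcome also depended on timing between threads, which is why the issue only showed up every few runs.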

The moral of the story is that in the release candidate phase of the project, there are frequently issues that take several days to debug, and one needs to properly account for this in the planning.

That's it for this part. We very much hope you enjoyed this deep dive into the release candidate step of the platform adaptation process. In the next and final article, we will talk about the remaining stages: the gold master and post-launch support.

If you would like to find out any further information or just have a chat then please contact us here.

Authors: Ralph Egas (CEO), Erik Bastianen (CTO), Wilco Schroo (lead programmer), Coen Campman (lead programmer), Wouter van Dongen (lead programmer), Balázs Török (senior lead programmer), Eric Troost (programmer), Ross Martin (programmer), Adrian Zdanowicz (programmer), Savvas Lampoudis (senior game designer)