How to place virtual objects in the real world convincingly

The team behind Angry Birds AR: Isle of Pigs shares its secrets for making augmented reality feel more like reality

Augmented reality has created an incredible opportunity for developers to bring video games into the real world for the first time. But as with any new opportunity, building for AR comes with a whole new set of challenges that few creators have faced before.

How do you create an experience that exists in 360 degrees around a player? How do you account for in-room obstacles? What should you do to mirror real-world lighting and color?

What sets AR games apart from everything that has come before is the need to create a meaningful sense of immersion that blends the real and virtual worlds.

As the technical director for augmented and mixed reality at Resolution Games, this is something I've had extensive experience with since the format emerged, and I've been fortunate enough to develop for every AR device of note so far. What I've learned is that AR immersion all comes down to a few key things.

Shadows

Every artist knows that shadows are crucial to creating a believable world, but how do you need to think about them differently when putting digital objects in a physical space? Ambient occlusion is a big piece of the puzzle -- it lets creators put a little blob shadow underneath any objects resting on the ground, making it so that the shadow isn't just attached to the object, but clearly connected to the surface on which it's cast.

Both static and dynamic objects can benefit from ambient occlusion, but the approach needs to be a little different for each.

Ambient occlusion is normally achieved automatically by baking lights or similar methods, but to use this effect with static objects, we built geometry around our props, and that geometry became the ambient occlusion. So all of our static props have what we call "ambient occlusion skirts" around them. This way we can tweak the ambient occlusion to make it look exactly how we want in every situation.

For dynamic objects, we achieved ambient occlusion by drawing the level blocks from the bottom looking up with an orthographic camera. This creates a depth buffer in which black indicates shadow: the darker the value, the closer that block is to the ground.

For example, we drew the shadow into a 32x32 pixel texture, which we then transformed into a 128x128 black and white texture with a simple blur. This creates a nice and soft ambient occlusion shadow. We then drew the 128x128 texture on a transparent quad on the ground plane.
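To make that pipeline concrete, here is a minimal CPU-side sketch in Python. It is not our shader code: the height input, the `max_height` cutoff, the nearest-neighbour upscale, and the box blur are all assumptions chosen for illustration, and only the 32x32 and 128x128 sizes come from the description above.

```python
# Sketch of a blurred blob-shadow texture. The sizes follow the text (32x32 -> 128x128);
# the height input, max_height, and the box blur are illustrative assumptions.

SRC_SIZE = 32
DST_SIZE = 128


def shadow_from_heights(heights, max_height=1.0):
    """Turn height-above-ground samples into shadow strength (1.0 = darkest).

    None means nothing was drawn over that texel, so it casts no shadow.
    """
    return [
        [0.0 if h is None else max(0.0, 1.0 - h / max_height) for h in row]
        for row in heights
    ]


def upscale(img, factor):
    """Nearest-neighbour upscale by an integer factor."""
    size_y, size_x = len(img) * factor, len(img[0]) * factor
    return [[img[y // factor][x // factor] for x in range(size_x)] for y in range(size_y)]


def blur_line(line, radius):
    """1D box blur; the window is truncated at the texture edge."""
    out = []
    for i in range(len(line)):
        lo, hi = max(0, i - radius), min(len(line), i + radius + 1)
        out.append(sum(line[lo:hi]) / (hi - lo))
    return out


def box_blur(img, radius):
    """Separable box blur: rows first, then columns."""
    rows = [blur_line(row, radius) for row in img]
    cols = [blur_line(list(col), radius) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


def make_ao_texture(heights, max_height=1.0, radius=4):
    """32x32 height samples in, 128x128 soft shadow texture out."""
    small = shadow_from_heights(heights, max_height)
    return box_blur(upscale(small, DST_SIZE // SRC_SIZE), radius)
```

The resulting values would be used as the opacity of the black quad on the ground plane, so a block resting on the ground gives a dark core with a soft falloff at its edges.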

Follow nature's lighting cues

Every game developer is familiar with lighting, but few have had to contend with lighting in the real world, or with adjusting their approach to its variable conditions.

When creating lighting for AR projects, you need to adjust the light intensity of virtual objects in real time, based on what's happening in the room. If someone is playing in a bright room, their game should look different than someone playing in a dark room. And as you can imagine with lighting, there are plenty of other factors to consider.

In Angry Birds AR: Isle of Pigs, we used two lights in our setup: a directional light and an ambient light. ARKit gives us a light estimation value between 0 and 1, but before we give this to the lights in the scene, we tweak this estimation slightly to get the precise light intensities we want. We also adjust the color of the lights, both ambient and directional, based on the light temperature estimation from the device.
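As a rough illustration of that kind of tweak, the Python sketch below remaps a 0 to 1 light estimate and turns a color temperature into a tint for both lights. Every constant in it is a placeholder assumption, not a value from the game: the `floor` and `gamma` parameters, the warm and cool tints, the 6500 K neutral point, and the ambient scale would all need tuning by eye.

```python
# Sketch only: all constants below are illustrative assumptions, not shipped values.

WARM_TINT = (1.0, 0.78, 0.60)     # assumed tint for very warm light
NEUTRAL_TINT = (1.0, 1.0, 1.0)    # assumed neutral white
COOL_TINT = (0.80, 0.88, 1.0)     # assumed tint for very cool light
WARM_K, NEUTRAL_K, COOL_K = 2000.0, 6500.0, 10000.0


def clamp01(x):
    return max(0.0, min(1.0, x))


def lerp(a, b, t):
    return a + (b - a) * t


def tweak_intensity(estimate, floor=0.2, gamma=0.8):
    """Remap the 0..1 estimate so dark rooms never turn the scene fully black."""
    shaped = clamp01(estimate) ** gamma
    return lerp(floor, 1.0, shaped)


def temperature_to_tint(kelvin):
    """Blend between warm, neutral and cool tints based on colour temperature."""
    if kelvin <= NEUTRAL_K:
        t = clamp01((kelvin - WARM_K) / (NEUTRAL_K - WARM_K))
        a, b = WARM_TINT, NEUTRAL_TINT
    else:
        t = clamp01((kelvin - NEUTRAL_K) / (COOL_K - NEUTRAL_K))
        a, b = NEUTRAL_TINT, COOL_TINT
    return tuple(lerp(x, y, t) for x, y in zip(a, b))


def light_settings(estimate, kelvin, ambient_scale=0.6):
    """Return intensity and colour for the directional and ambient lights."""
    intensity = tweak_intensity(estimate)
    tint = temperature_to_tint(kelvin)
    return {
        "directional": {"intensity": intensity, "color": tint},
        "ambient": {"intensity": intensity * ambient_scale, "color": tint},
    }
```

Calling `light_settings(0.5, 3000)` once per frame with the latest estimates would give a dimmer, warmer pair of lights than `light_settings(0.9, 6500)`.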

Another lighting trick worth considering is using the environment texture produced by ARKit as a skybox to affect the lighting in your scene. Objects then pick up colors that feel reflected from the environment, instead of displaying an unnatural color that sticks out like a sore thumb.

A word of warning: this can be fairly tricky to implement, because an environment can sometimes be very bright or very dark. So while this technique can be used to great effect, it also comes with its own set of challenges.

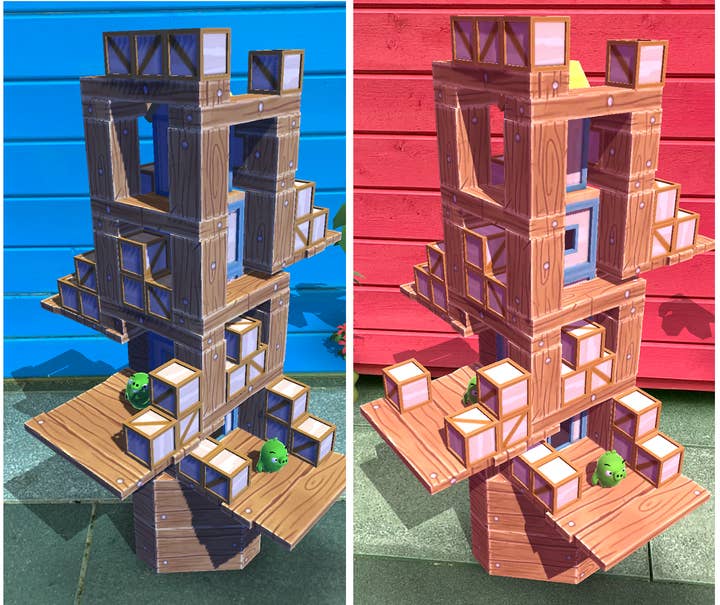

Add a dash of color to the room

Color plays an integral part in how you present your game, but how the colors of your objects mix with the colors of the real world can easily be overlooked.

By tinting the video feed so that the real world appropriately matches your scene, you can keep the two sets of colors from clashing and breaking the mood. It's a simple solution that feels more in the realm of photo-sharing apps than game development, but there's no denying that color filters work wonders for improving immersion.

In the example above from Angry Birds AR: Isle of Pigs, the video feed is provided to the game as two textures, both in the YCbCr color space. There is then a shader that converts from YCbCr to RGB, and it is this shader we modified to achieve this effect.

The conversion takes the YCbCr color as a Vector4 and multiplies it by a 4x4 matrix; the resulting vector is the RGB color.
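The matrix step can be sketched in a few lines of Python. The coefficients below are the standard full-range BT.601 values, which is an assumption on my part here rather than a copy of the shader, and folding a diagonal tint matrix into the conversion is just one plausible way to modify it. `tint_matrix` and the tint values are hypothetical.

```python
# Sketch: YCbCr -> RGB as a 4x4 matrix multiply, with an optional tint folded in.
# Coefficients are standard full-range BT.601 (assumed); tint values are made up.

YCBCR_TO_RGB = [
    [1.0,  0.0,     1.402,  -0.7010],
    [1.0, -0.3441, -0.7141,  0.5291],
    [1.0,  1.772,   0.0,    -0.8860],
    [0.0,  0.0,     0.0,     1.0],
]


def mat_vec(m, v):
    """Multiply a 4x4 matrix by a 4-component column vector."""
    return [sum(m[r][c] * v[c] for c in range(4)) for r in range(4)]


def mat_mul(a, b):
    """Multiply two 4x4 matrices."""
    return [[sum(a[r][k] * b[k][c] for k in range(4)) for c in range(4)] for r in range(4)]


def tint_matrix(r, g, b):
    """Diagonal matrix that scales each RGB channel (hypothetical helper)."""
    return [
        [r, 0.0, 0.0, 0.0],
        [0.0, g, 0.0, 0.0],
        [0.0, 0.0, b, 0.0],
        [0.0, 0.0, 0.0, 1.0],
    ]


def ycbcr_to_rgb(y, cb, cr, matrix=YCBCR_TO_RGB):
    """Convert one pixel; inputs and outputs are in the 0..1 range."""
    r, g, b, _ = mat_vec(matrix, [y, cb, cr, 1.0])
    return tuple(max(0.0, min(1.0, c)) for c in (r, g, b))


# Pre-multiplying once means the tint costs nothing extra per pixel.
WARM_FEED = mat_mul(tint_matrix(1.0, 0.9, 0.8), YCBCR_TO_RGB)
```

With this setup, `ycbcr_to_rgb(0.5, 0.5, 0.5)` gives mid-grey, while passing `matrix=WARM_FEED` shifts the same pixel toward a warmer tone.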

Augment the real world

Setting tone with color is one thing, but what about managing the real-world objects that are in your scene simply by way of existing? You can use the very same video feed to modify those objects using a refraction shader.

Start by rendering the video feed as a background. Then render all opaque objects and grab the render buffer to use as a texture. Now use that render buffer texture as an input into your refraction shader.
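The last step, sampling the grabbed buffer with an offset, is the heart of the effect. The Python sketch below shows the idea on a plain 2D list of pixels. It assumes a screen-space normal per pixel and a made-up `strength` and tint, and it stands in for what a fragment shader would do rather than reproducing our shader.

```python
# Sketch: refraction as an offset lookup into the already-rendered scene
# (video feed plus opaque objects). Parameter values are illustrative assumptions.

def clamp(value, lo, hi):
    return max(lo, min(hi, value))


def refract_pixel(scene, x, y, normal_xy, strength):
    """Sample the captured scene at a position pushed along the surface normal."""
    height, width = len(scene), len(scene[0])
    sx = clamp(int(round(x + normal_xy[0] * strength)), 0, width - 1)
    sy = clamp(int(round(y + normal_xy[1] * strength)), 0, height - 1)
    return scene[sy][sx]


def shade_ice(scene, x, y, normal_xy, strength=2.0,
              tint=(0.8, 0.9, 1.0), tint_amount=0.25):
    """Refracted background blended toward an icy tint."""
    sample = refract_pixel(scene, x, y, normal_xy, strength)
    return tuple(c + (t - c) * tint_amount for c, t in zip(sample, tint))
```

Because the scene buffer already contains the video feed, anything real that sits behind the ice is displaced along with the virtual objects.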

In the video above, take a look at the top ice block and watch what happens as it passes in front of a houseplant. The plant, too, is refracted! AR isn't just about putting virtual objects into real environments -- it's about modifying the real world around us, too. And the more you can do that, the more believable your AR project will be.

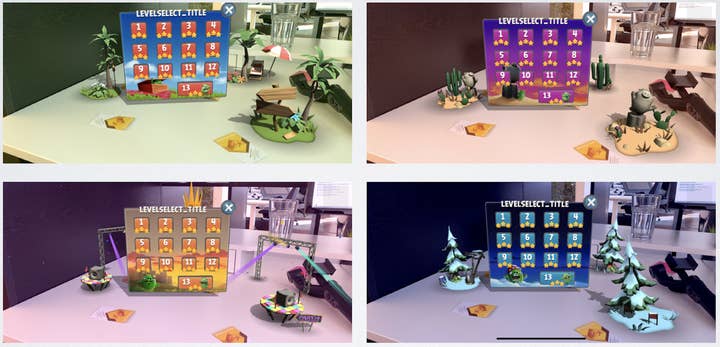

Create spatial awareness with particles

Few things create a sense of immersion in AR quite as well as making players feel like there are things they need to explore just off-camera. Particles can achieve this feeling very effectively. Think about snowflakes falling in the room, or stardust falling from the night sky.

Elements like these create a very immediate effect on the player, making them want to move around to see more -- almost as if they could catch them.

Don't forget: the real world is an obstacle (and that's a good thing)

Few things break a great AR experience faster than having objects that don't behave as they would in the real world. If you move your device so that a piece of furniture is blocking the scene below, it really needs to block the scene below. It all comes down to one word: occlusion.

Occluder surfaces are the way you can hide a virtual object behind or beneath a real one. You won't always want to do this (after all, you may want your game to be played on a table rather than hidden under it), but if a user's device can scan for real-world surfaces and detect depth, you can use that depth as a buffer to mask virtual content behind anything in the real world.

This isn't something that was possible at the dawn of AR gaming, but more and more devices have these capabilities, and you should be leveraging them every chance you get.
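In its simplest form, depth-based occlusion is a per-pixel comparison between the depth of the virtual scene and the depth reported for the real world. The Python sketch below assumes both depths are distances from the camera in the same units, with `None` standing for "no data"; the function names are hypothetical, and a real implementation would run on the GPU.

```python
# Sketch: hide virtual pixels that sit behind a real-world surface.
# Depths are assumed to be distances from the camera; None means "no data".

def composite_pixel(camera_rgb, virtual_rgb, virtual_depth, real_depth):
    """Keep the virtual pixel only if it is in front of the real surface."""
    if virtual_depth is None:
        return camera_rgb          # nothing virtual drawn here
    if real_depth is not None and real_depth < virtual_depth:
        return camera_rgb          # a real object is closer, so it occludes
    return virtual_rgb


def composite(camera, virtual, virtual_depth, real_depth):
    """Apply the per-pixel test across whole images of equal size."""
    return [
        [
            composite_pixel(camera[y][x], virtual[y][x],
                            virtual_depth[y][x], real_depth[y][x])
            for x in range(len(camera[0]))
        ]
        for y in range(len(camera))
    ]
```

Where the device reports no real-world depth, this sketch falls back to showing the virtual pixel, which matches how the game would look on hardware without depth sensing.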

And the technology around augmented reality is constantly evolving. With phone-tethered glasses on the horizon, and new development kits from the likes of Qualcomm and Niantic on the way, there will be plenty of new ways to build immersive experiences that jump right into the room.

But no matter where the technology takes us, the tips above should provide a great grounding for creating AR games that players can enjoy, experience, and fully immerse themselves in.

Magnus Runesson is the technical director for augmented and mixed reality at Resolution Games. The company has recently announced the formation of a dedicated division focused on AR projects.