Tech Focus: Olde Worlde Rendering

How game developers are using decades- and centuries-old techniques to make modern games better.

Video games are a relatively modern part of human history; as such they ride upon the wave of cutting-edge technological development. Many of the rendering techniques used in games are passed down directly from the film and TV visual effects industries, where there is the relative luxury of being able to spend minutes or even hours rendering a single frame, whereas a game engine has only milliseconds available. It's thanks to the ever-increasing speed of processing hardware that these techniques become viable for real-time game rendering.

However, it may surprise you to learn that some of the algorithms used in modern game engines have a longer history and a very different origin than you might think. Some originate in cartography, others come from engineering, and some were simply pieces of maths that had no practical use until recent times. As amazing as it may sound, many crucial 3D rendering techniques - including those being introduced into today's state-of-the-art game engines - are based on mathematical concepts and techniques that were devised hundreds of years ago.

Here, we present several examples that pre-date our industry but are now incredibly valuable in the latest games. The history and the people who discovered the algorithms will all be revealed: it's a fascinating insight into the ingenuity of modern games developers, re-purposing existing knowledge and techniques in order to advance the science of game-making.

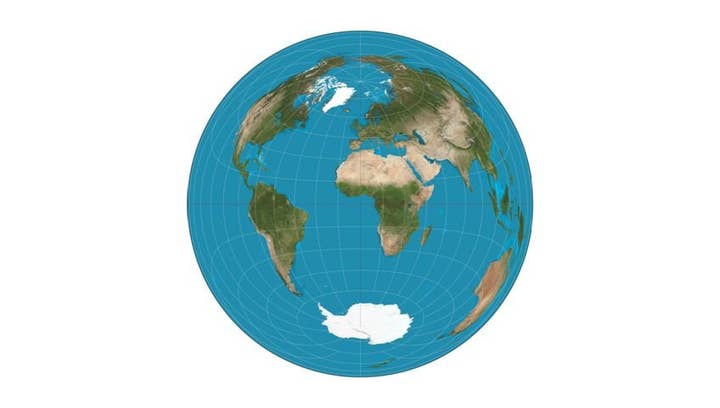

Lambert Azimuthal Equal-Area Projection

What is it? It sounds like a mouthful, but this is a neat trick for projecting a 3D direction (or a point on a sphere) into a flat 2D space. It was invented in 1772 by the Swiss mathematician Johann Heinrich Lambert as a method of plotting the surface of the spherical Earth onto a flat map.

How does this apply to game rendering? In a deferred engine, you first render various attributes of the meshes to what are called g-buffers (geometry buffers) before calculating lighting using that information. One of the most common things to store in a g-buffer is the normal vector, which is a direction pointing directly away from the geometry surface for each pixel. The normal vector would usually be stored with an x, y and z coordinate, using up three channels of a g-buffer. However, because a normal always has a length of one, it really only has two degrees of freedom, so you can compress this information down to two channels with Lambert Azimuthal equal-area projection.

The reason this projection is particularly useful compared to others is that it encodes normal vectors that face towards the camera quite accurately, with this accuracy gradually reducing as the normal begins to point away from the camera. This is perfect for game rendering as most of the normal vectors for objects on the screen will point towards the camera or only very slightly away.

The benefit of doing a 2D projection rather than simply storing the normal vector in x, y, z form is that it frees up a g-buffer channel, which can either be dropped to save bandwidth and storage, or be used to store some other useful information about the meshes for the lighting pass. This technique is used by several modern game engines, including the new CryEngine 3 technology that powered the recent state-of-the-art multi-format shooter, Crysis 2.
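To make the idea concrete, here is a minimal sketch of packing a unit normal into two values and unpacking it again with the Lambert azimuthal equal-area mapping. It is written in Python for readability rather than as shader code, and it is not taken from any particular engine. The function names `encode_normal` and `decode_normal` are hypothetical, and the sketch assumes view-space normals where +z points towards the camera.

```python
import math

def encode_normal(n):
    """Pack a unit normal (x, y, z) into two values in the range [0, 1].

    Assumes +z points towards the camera. The single direction (0, 0, -1),
    facing exactly away from the camera, cannot be encoded (division by zero).
    """
    x, y, z = n
    f = math.sqrt(8.0 * z + 8.0)
    return (x / f + 0.5, y / f + 0.5)

def decode_normal(enc):
    """Recover the unit normal (x, y, z) from the two packed values."""
    ex = enc[0] * 4.0 - 2.0
    ey = enc[1] * 4.0 - 2.0
    f = ex * ex + ey * ey
    # max() guards against tiny negative values caused by rounding
    g = math.sqrt(max(0.0, 1.0 - f / 4.0))
    return (ex * g, ey * g, 1.0 - f / 2.0)

# A normal tilted away from the camera axis survives the round trip
packed = encode_normal((0.6, 0.0, 0.8))
print(packed, decode_normal(packed))
```

A normal pointing straight at the camera lands in the centre of the 2D space at (0.5, 0.5), and normals that tilt further away move out towards the edge of a disc. In a real g-buffer the two values would also be quantised to the channel's bit depth, which this sketch leaves out.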

Lambertian Reflectance (or Lambert's Cosine Law)

What is it? How is it used in games? Johann Heinrich Lambert was a busy fellow. There are other ideas of his that are commonly used in game rendering. One of the most widespread is Lambertian Reflectance, which determines the brightness of diffuse light reflecting off a surface and was first published way back in 1760.

Lambert's law says that the brightness of diffuse light is proportional to the cosine of the angle between the direction to the light source and the surface normal. If you recall some trigonometry from school, the cosine is equal to one when the angle is zero, so the surface appears brightest when it faces the light source directly.

This cosine falls towards zero as the angle rises to 90 degrees, so the brightness of diffuse light falls in the same fashion. The fact that he worked this out in the 18th century simply from observations of the real world, with no computers to create rendered images to test his theory against, is nothing short of astounding.
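The cosine relationship described above can be sketched in a few lines. For two unit-length vectors, the cosine of the angle between them is simply their dot product, which is why the calculation is so cheap. The function names here are hypothetical, and clamping negative values to zero for surfaces facing away from the light is a common convention assumed for this sketch rather than something stated in Lambert's original law.

```python
import math

def normalize(v):
    """Scale a 3D vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def lambert_diffuse(normal, to_light):
    """Diffuse brightness factor in the range [0, 1].

    `normal` is the surface normal and `to_light` points from the surface
    towards the light source; neither needs to be pre-normalized.
    """
    n = normalize(normal)
    l = normalize(to_light)
    cos_angle = sum(a * b for a, b in zip(n, l))
    # Surfaces facing away from the light receive no diffuse light
    return max(0.0, cos_angle)

# Light arriving at 60 degrees from the normal gives about half brightness
angle = math.radians(60.0)
print(lambert_diffuse((0.0, 0.0, 1.0), (math.sin(angle), 0.0, math.cos(angle))))
```

The result would typically be multiplied by the light's colour and the surface's diffuse colour to get the final shaded value.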