Modulate: how AI-assisted voice moderation tools can help combat toxicity

Why Modulate’s voice-native moderation tool ToxMod is more beneficial than old forms of grief reporting

Voice moderation is a sensitive issue. Players expect privacy, but the halcyon days of early, friendly online gaming are long gone, and interacting with strangers in online games today can all too often lead to toxic behaviour. Striking the balance between player privacy and community safety is the challenge now facing game studios.

Boston-based start-up Modulate wants to help game companies clean up toxic behaviour in their games with machine learning-based tools that promise to empower moderators and protect players.

Modulate CEO Mike Pappas told GamesIndustry.biz why the company’s voice-native moderation tool ToxMod is more beneficial than older forms of grief reporting, why studios should build effective codes of conduct amid changing online safety regulations, and how Modulate’s technology and guidance are helping to make online communities safer.

ToxMod: machine-assisted proactive reporting vs user-generated reporting

User-initiated incident reports for bad behaviour have been standard for many years – just this summer, Xbox rolled out new voice reporting features for its platforms. But Pappas says game studios cannot rely on this method alone.

“User reports are a really important part of the overall trust and safety strategy. You have to give your users ownership,” he says.

“But you can’t just rely on that channel. A lot of users have had bad experiences with user reporting systems in the past, where they feel like their reports go into a black box, that they don’t have the tools to submit, and they don’t know if anything they reported is actually getting addressed.”

Submitting a report often takes players out of the action, whether they are playing a game or chatting in a social group. This is one reason behind Modulate’s assertion that user reports capture only 10 to 30% of the bad behaviour in studios’ games, and it is the gap that ToxMod, Modulate’s AI-assisted, voice-native and comprehensive moderation tool, aims to close.

“There’s a lot of tools out there to just transcribe audio, but you lose so much valuable nuance from what’s actually being said. We’re not only looking at things like tone and emotion, but we can even look at behavioural characteristics,” says Pappas.

“So, if you join a group of people, say one thing and then everyone goes shocked silent for a second, that’s a warning sign. We can look at all of those kinds of indications.”

“Users still can report, but especially for the really harmful stuff, like child grooming or violent radicalisation, you often have a target that is not aware of what’s happening and not able to report it. So it was really important for us to be able to proactively bring those things to a studio’s attention.”

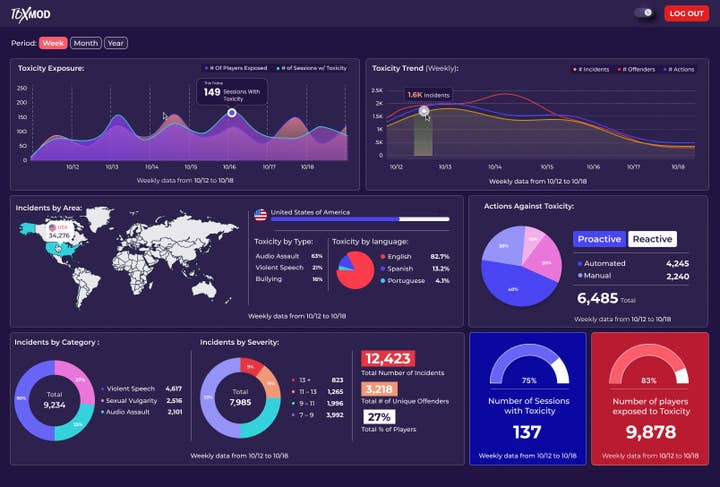

Modulate describes ToxMod as comprehensive and proactive. The machine learning technology works by “watching the entire voice chat ecosystem”. With this fuller picture of how users interact, Modulate says, it can proactively flag potentially harmful incidents to human moderators more accurately than traditional systems.

For example, certain terms might be socially acceptable within a particular community, and in that situation ToxMod should not raise any flags. If an outsider joins the chat and starts using the same terms in a derogatory manner, however, that is when ToxMod will act. “We don’t make an immediate conclusion on a harmful statement. What we do is, we say: how is everyone else reacting? So it’s looking at these conversations in a full, complete context,” says Pappas.
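To make the idea of “how is everyone else reacting?” a little more concrete, here is a toy sketch in Python. It is not Modulate’s implementation, which the article does not describe; the `Utterance` type, its `flagged_term` field (standing in for some upstream detector of potentially harmful terms) and the two-second `silence_threshold` are all hypothetical. It illustrates only one reaction-aware step, borrowing Pappas’s “shocked silence” example: a flagged statement is escalated to a human moderator when the rest of the group goes quiet afterwards, not on the words alone.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Utterance:
    """One stretch of speech in a voice channel (hypothetical data model)."""
    speaker: str
    start: float        # seconds from the start of the session
    end: float
    flagged_term: bool  # hypothetical: an upstream detector heard a potentially harmful term


def reaction_gap(chat: List[Utterance], i: int) -> Optional[float]:
    """Seconds between utterance i ending and the next utterance by a different speaker.

    Returns None if nobody else speaks afterwards in this window.
    """
    speaker = chat[i].speaker
    for later in chat[i + 1:]:
        if later.speaker != speaker:
            return later.start - chat[i].end
    return None


def should_escalate(chat: List[Utterance], i: int, silence_threshold: float = 2.0) -> bool:
    """Escalate a flagged utterance only if the rest of the group reacts badly.

    The only 'bad reaction' signal used here is a long silence from everyone else,
    a crude stand-in for richer signals such as tone, emotion and behaviour.
    """
    if not chat[i].flagged_term:
        return False                    # nothing was flagged in the first place
    gap = reaction_gap(chat, i)
    if gap is None:
        return False                    # no reaction observed yet; wait for more context
    return gap >= silence_threshold     # a long pause from the others is the warning sign


if __name__ == "__main__":
    chat = [
        Utterance("ana", 0.0, 1.0, False),
        Utterance("ben", 1.2, 2.0, True),   # flagged term, but the group carries on normally
        Utterance("ana", 2.3, 3.0, False),
        Utterance("cy", 3.5, 4.0, True),    # flagged term followed by three seconds of silence
        Utterance("ana", 7.0, 8.0, False),
    ]
    print([should_escalate(chat, i) for i in range(len(chat))])
    # [False, False, False, True, False]
```

The same flagged term leads to different outcomes depending on how the group responds, which is the design point Pappas describes; a production system would weigh many more signals than a single pause.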

The intended result is safer, more welcoming gaming communities. Studios already using ToxMod include Among Us VR developer Schell Games, Rec Room, and Activision, which has launched Modulate’s technology in Call of Duty: Modern Warfare II and Warzone and will deploy it in the upcoming Call of Duty: Modern Warfare III.

Helping developers to create effective codes of conduct for their games

Modulate isn’t only developing machine learning tools to improve player safety. With big changes coming to online safety regulations, such as the European Union’s Digital Services Act (DSA), it is also guiding developers and moderators as they write policies that respect players’ right to privacy while meeting new standards of transparency.

“What you find is the vast majority of the ‘bad actors’ on your platform aren’t bad actors at all. There are people who are trying their best to engage in a positive way, but they just don’t understand what the rules are,” Pappas explains.

“So the point of a code of conduct is, in theory, to solve this problem, and make sure that when someone steps into one of these online spaces, they know what the norms are. So you don’t have these situations where well-intentioned users are making mistakes that lead to other users feeling offended, upset or excluded.”

This is a tough problem to solve, Pappas continues, because the vast majority of EULAs and privacy policies aren’t written to be clearly understood by a general audience. “It involves saying things like: ‘don’t harass users on this platform’. And now you have to define what harassment means and what does hate speech mean?”

Typically, game studios have answered these questions in heavy legalese, and while that may shield them legally, most users understand little of it. However, Pappas points out that recent regulatory changes, namely the EU’s DSA and the UK’s Online Safety Bill, will put studios under more pressure to “communicate very clearly to your users in a way they can understand what is and isn’t acceptable”. (Read more from Modulate about these upcoming regulations.)

Privacy or safety?

These new regulations bear directly on the tension between privacy and safety, Pappas says.

“In order to offer you safety on the platform, we need to be able to look at what’s happening on the platform. But that necessarily pulls a little against privacy. It’s not an all or nothing. And there’s a lot of cool solutions, like what we’ve built here at Modulate, that allow you to get a lot of safety at a very small privacy cost. But you can’t have perfect privacy and perfect safety – there needs to be a tension there,” he says.

He adds that studios need to communicate with users who have committed a punishable offence, even though this can be difficult: “You need to be able to explain to the user why they were doing something bad. Usually, that should be you pointing to the code of conduct. If, for some reason, you can’t point to the code of conduct, then, at least when you message the user to let them know that you’re banning them, you need to say, ‘hey, here’s what we saw and here’s why that’s unacceptable’. And you need to open those communication channels.”

When it comes to communication, nuance and context are central, and Modulate says it has carefully built both into technology it believes can combat toxicity in games and make online communities safer. In Pappas’s words, “we don’t see ourselves as a vendor, we see ourselves as a partner”.

Above all, he stresses the cost of relying on reports alone: “If you’re not being proactive, then you’re only catching what your users report and you’re missing all of the most insidious stuff. You’re not protecting children from exploitation, you’re not stopping violent radicalisation, you’re just not going to be as impactful if you’re not being proactive.”

Text moderation is an option, but Pappas feels that moderating text while ignoring voice is not a winning approach either. Voice, he argues, is where moderation matters most: “Voice is just where we’re most human. It’s where our emotions are, it’s where we build relationships, and it’s where we get hurt more.

“The unique thing that Modulate is able to offer, because of the really sophisticated machine learning techniques we’ve been able to develop here, is that we can offer you that really comprehensive, detailed, context-rich understanding. And we can offer that at a price that doesn’t break the bank and allows you to actually use this technology at the scale it deserves.”

For more about ToxMod and how it can help your studio with voice moderation, visit Modulate’s website.